This is an experimental animation project rendered in real time using the Unity game engine. This page describes its development process.

Concept

This project focuses on animation created by game AI. A previous, smaller-scale project served as the base concept, which I describe below.

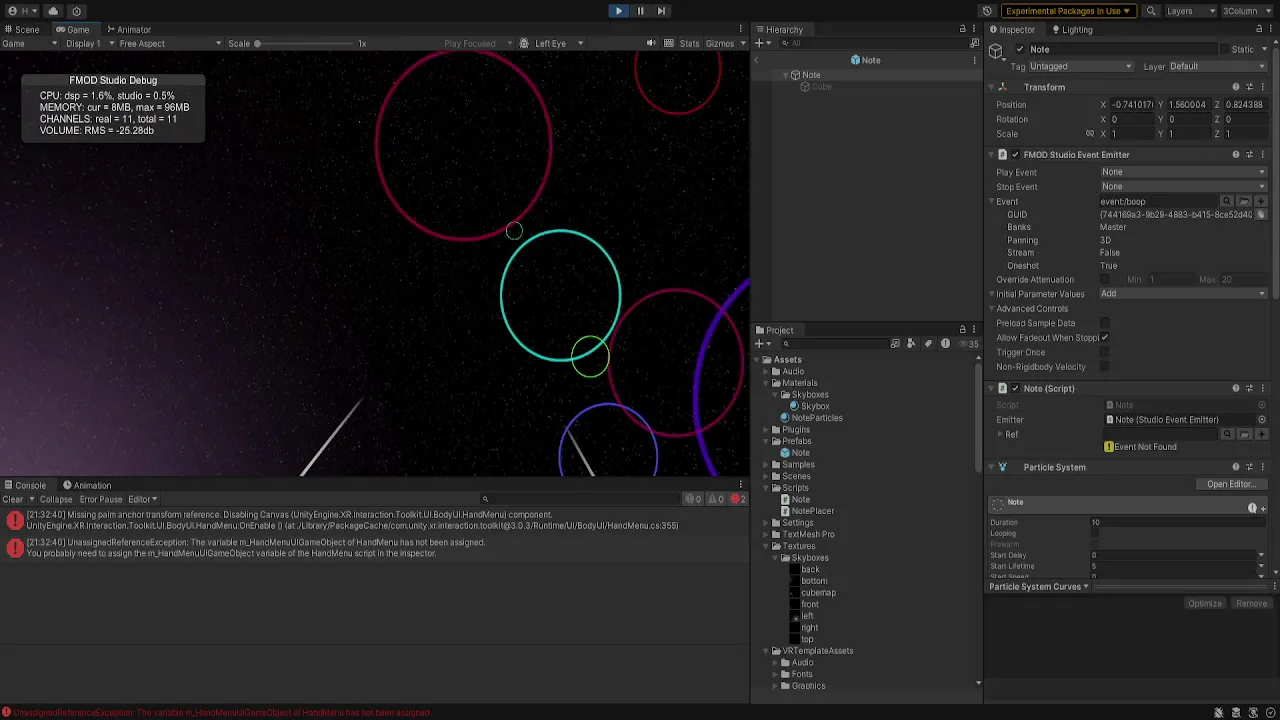

During the ideation process for my master’s thesis, I experimented with creating a small audiovisual project in virtual reality within Unity. While that experiment was not used in the final thesis, it did serve as a point of reference for this project.

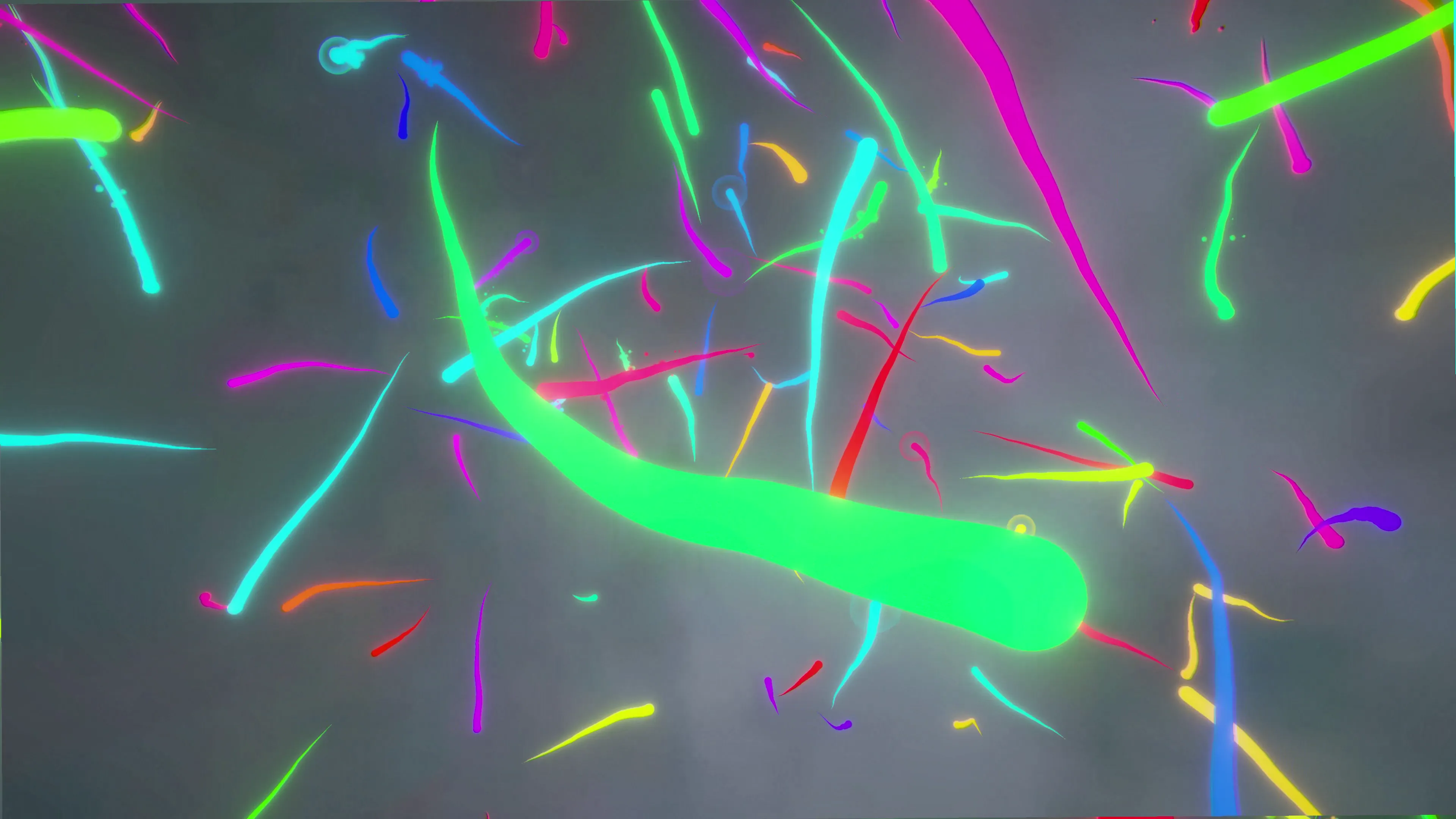

Within the experiment, each button on the user’s VR controllers corresponds to a note. When the user presses a button, that note plays and an accompanying visual appears. An example visual can be seen in Figure 1.

While the experiment was created before I was aware of Fischinger’s works, the two share a good number of similarities, as both are audiovisual works.

Overall, the intention of the work is to explore interactions between game AI agents, and the soundscape that these agents can create.

Research

Oskar Fischinger

Oskar Fischinger was an abstract animator who lived from 1900 to 1967 (Thompson, 2005). While he worked on a great number of animations, the particular work that prompted my further exploration is An Optical Poem, a music-driven experience. The visuals on screen are influenced by what is heard through the speakers, as if reacting to each instrument as it plays.

I was further inspired by Google Doodle’s (Oskar Fischinger’s 117th Birthday Doodle - Google Doodles, 2017) celebration of Fischinger. Their work involves programming short music patterns with notes, which then produce various shapes as those notes are played. Figure 3 shows a screenshot of the Doodle in action.

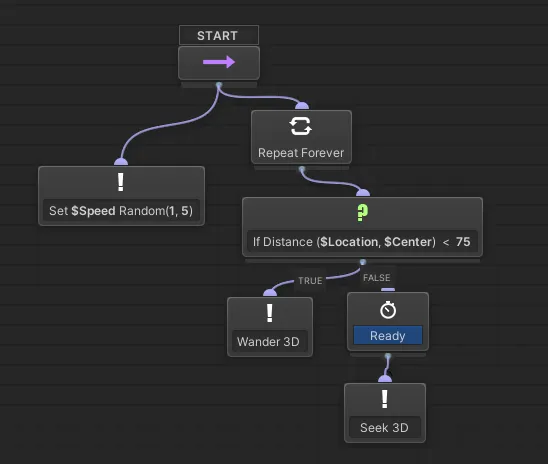

Behavior Trees

While they are not used extensively in my project, Behavior Trees do see some use. Behavior Trees are a common technique for building game AI (Champandard & Dunstan, n.d.). They can be used to build complex interactions from simple behaviors.

Steering Behaviors

In games, autonomous characters may become a necessity as part of the core game design, as their ability to move around can make the game experience livelier. Steering behaviors are one of the parts that make up motion behaviors, and they are responsible for path determination (Kallmann, 1999). While a number of different steering behaviors were written for this project, only two key behaviors were used in the final animation: seek and wander.

Seek redirects an agent so that it turns and moves towards a destination. Wander works similarly to Seek, except that the agent seeks a randomized location slightly ahead of itself. This randomized position changes every frame.

Music, the Pentatonic Scale, and ADSR

A common conflict in music (though one taken advantage of in certain genres) is the unnatural clash of frequencies; certain chords can produce dissonant sounds in the standard seven-note major/minor scales (Cazden, 1945). This is in contrast to the pentatonic scale, which consists of five notes. Chords built from the pentatonic scale are less prone to dissonant clashes than those built from seven-note scales.

An ADSR envelope is commonly used in sound synthesis. ADSR (Attack, Decay, Sustain, Release) describes how a sound changes over time (ADSR Explained, 2023). Many synthesizers allow an ADSR envelope to manipulate any parameter, from volume to pitch and even sound effects. Attack is the amount of time until the parameter reaches its maximum value. Decay is the amount of time until the parameter falls from that maximum to the sustain value. Sustain is the value the parameter holds at for as long as the note is held. Release is the amount of time from the sustain value until the parameter reaches 0.
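To make the four stages concrete, here is a minimal sketch of a linear ADSR envelope in Python. It is not taken from any synthesizer used in this project: the function name `adsr_level`, the linear ramps, and the assumption that the note is released only after the decay stage has finished are all illustrative choices.

```python
def adsr_level(t, attack, decay, sustain, release, note_off):
    """Return the envelope value (0..1) at time t, in seconds.

    attack, decay, release are durations in seconds; sustain is a level (0..1).
    note_off is the time the note is released; this sketch assumes
    note_off >= attack + decay, so the release always starts from the sustain level.
    """
    if t < 0:
        return 0.0
    if t < attack:
        # Attack: ramp from 0 up to the maximum value (1.0).
        return t / attack
    if t < attack + decay:
        # Decay: ramp from the maximum down to the sustain level.
        frac = (t - attack) / decay
        return 1.0 + (sustain - 1.0) * frac
    if t < note_off:
        # Sustain: hold the level while the note is held.
        return sustain
    if t < note_off + release:
        # Release: ramp from the sustain level down to 0.
        frac = (t - note_off) / release
        return sustain * (1.0 - frac)
    return 0.0


if __name__ == "__main__":
    # Sample the envelope of a one-second note at a few points in time.
    for t in (0.0, 0.05, 0.2, 0.5, 1.15, 2.0):
        print(t, adsr_level(t, attack=0.1, decay=0.2, sustain=0.5,
                            release=0.3, note_off=1.0))
```

A long attack, like the one used for the guitar-like sound later on, simply means a larger `attack` value, so the sound fades in rather than starting abruptly.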

Methods

Code Implementation

The project was implemented in Unity, version 2022.3. Besides the default tool sets that Unity provides, I also used NodeCanvas, which provides support for building Behavior Trees in a visual graph environment.

First, I spawn 200 Agents inside an invisible sphere; each Agent drives one moving particle in the world. All agents are generated with random colors and are driven by NodeCanvas Behavior Trees. They start with a random speed and begin wandering around. When they travel too far away, they redirect and seek until they re-enter the invisible sphere, then continue wandering around. The Behavior Tree graph is shown in Figure 4.
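The spawn-and-contain logic described above can be sketched independently of Unity and NodeCanvas. The Python below is only an illustration of the idea, not the project's implementation: the names `random_point_in_sphere`, `spawn_agents`, and `choose_behavior`, as well as the radius and speed range, are assumptions made for the example.

```python
import math
import random

ORIGIN = (0.0, 0.0, 0.0)


def random_point_in_sphere(radius, rng):
    """Pick a uniformly distributed point inside a sphere centred on the origin.

    Uses rejection sampling: draw points in the bounding cube and keep the
    first one that falls inside the sphere.
    """
    while True:
        p = tuple(rng.uniform(-radius, radius) for _ in range(3))
        if math.dist(p, ORIGIN) <= radius:
            return p


def spawn_agents(count, radius, rng, min_speed=1.0, max_speed=3.0):
    """Create agents with a random position, color, and speed (hypothetical ranges)."""
    return [
        {
            "position": random_point_in_sphere(radius, rng),
            "color": (rng.random(), rng.random(), rng.random()),
            "speed": rng.uniform(min_speed, max_speed),
        }
        for _ in range(count)
    ]


def choose_behavior(position, center, radius):
    """Wander while inside the sphere; seek back towards it once outside."""
    return "seek" if math.dist(position, center) > radius else "wander"


if __name__ == "__main__":
    rng = random.Random(42)
    agents = spawn_agents(200, radius=10.0, rng=rng)
    print(len(agents), choose_behavior(agents[0]["position"], ORIGIN, 10.0))
```

In the project itself this decision is made by the Behavior Tree; the `choose_behavior` function only mirrors the condition that switches between the two branches.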

The code for the Wander and Seek behaviors is given in Listing 1 and Listing 2, respectively. The Seek code makes use of a UseTarget parameter, which allows it to seek either a specific position or a moving target.

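```csharp
// Listing 1: Wander
// Project a point ahead of the agent along its current heading.
Vector3 direction = agent.velocity.normalized;
Vector3 target = agent.position + direction * SphereOffset;

// Offset that point by a random direction; this is re-rolled every time the code runs.
target += Random.onUnitSphere * SphereRadius;

Vector3 desired = target - agent.position;
if (desired.sqrMagnitude > 0)
{
    // Steering is the desired velocity minus the current velocity.
    Vector3 steer = desired.normalized * MaxSpeed.value;
    steer -= agent.velocity;
    agent.velocity += steer;
}
```

```csharp
// Listing 2: Seek
// Seek either a moving target's position or a fixed position.
Vector3 targetPos = UseTarget ? Target.position : TargetPosition;
Vector3 dif = targetPos - agent.position;
float distance = dif.magnitude;

if (distance > 0)
{
    // Steering is the desired velocity minus the current velocity.
    Vector3 steer = dif.normalized * MaxSpeed.value;
    steer -= agent.velocity;

    // Dividing by Mass makes heavier agents turn more slowly.
    agent.velocity += steer / Mass;
}
```

Audio Samples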

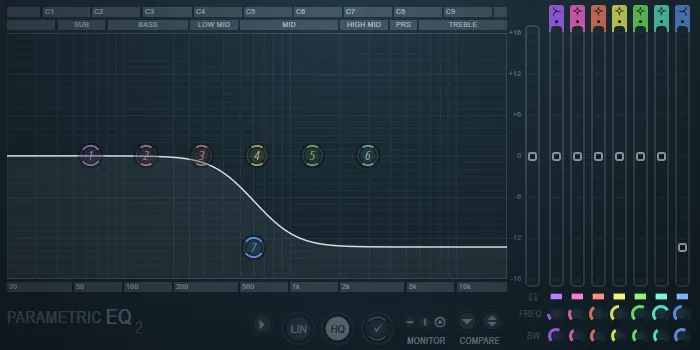

Audio samples were created in the FL Studio Digital Audio Workstation. Three sound generators were used: Glaze (by Native Instruments), which provides vocal-like synthesis, Vital (by Vital Audio), a wavetable synthesizer, and FLEX (by Image-Line), a sample preset synthesizer.

Every note generated is in the C Pentatonic scale. This scale consists of the notes C (Do), D (Re), E (Mi), G (So), and A (La).
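For reference, the pitches of these five notes can be computed with a short Python sketch. The project uses pre-rendered audio samples rather than computed frequencies, so this is purely illustrative; it assumes twelve-tone equal temperament with A4 tuned to 440 Hz, and the names `midi_to_hz` and `c_pentatonic` are made up for the example.

```python
A4_HZ = 440.0  # assumed concert pitch

# Semitone offsets of the C pentatonic notes above C.
C_PENTATONIC_OFFSETS = {"C": 0, "D": 2, "E": 4, "G": 7, "A": 9}


def midi_to_hz(note_number):
    """Convert a MIDI note number to a frequency in Hz (A4 = MIDI note 69)."""
    return A4_HZ * 2 ** ((note_number - 69) / 12)


def c_pentatonic(octave=4):
    """Return the frequencies of C, D, E, G, and A in the given octave."""
    base = 12 * (octave + 1)  # MIDI note number of C in that octave (C4 = 60)
    return {name: midi_to_hz(base + offset)
            for name, offset in C_PENTATONIC_OFFSETS.items()}


if __name__ == "__main__":
    for name, hz in c_pentatonic(4).items():
        print(f"{name}4: {hz:.2f} Hz")
```

The scale skips the fourth and seventh degrees of C major (F and B), so no two of its notes are a semitone apart.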

Background audio was created as well. It uses simple synthesized chords and serves only as a foundation that reduces dead air in the soundscape. The sounds were custom-designed in the Vital synthesizer, then filtered using Fruity Parametric EQ 2 to adjust the volume of certain audio frequencies. The settings used are provided in Figure 5.

Effects

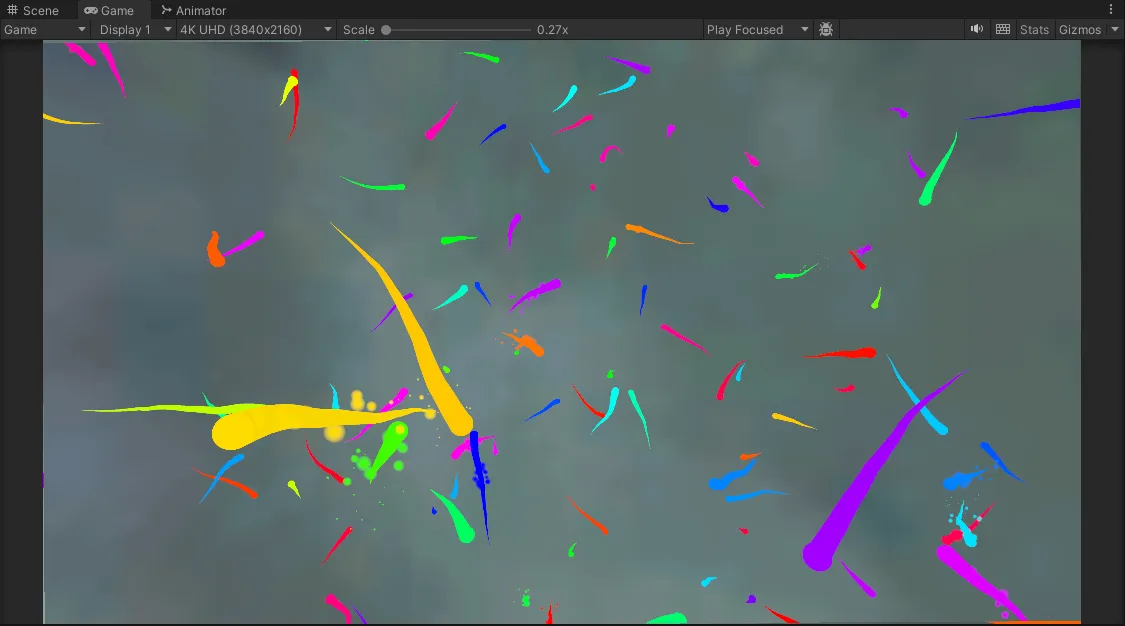

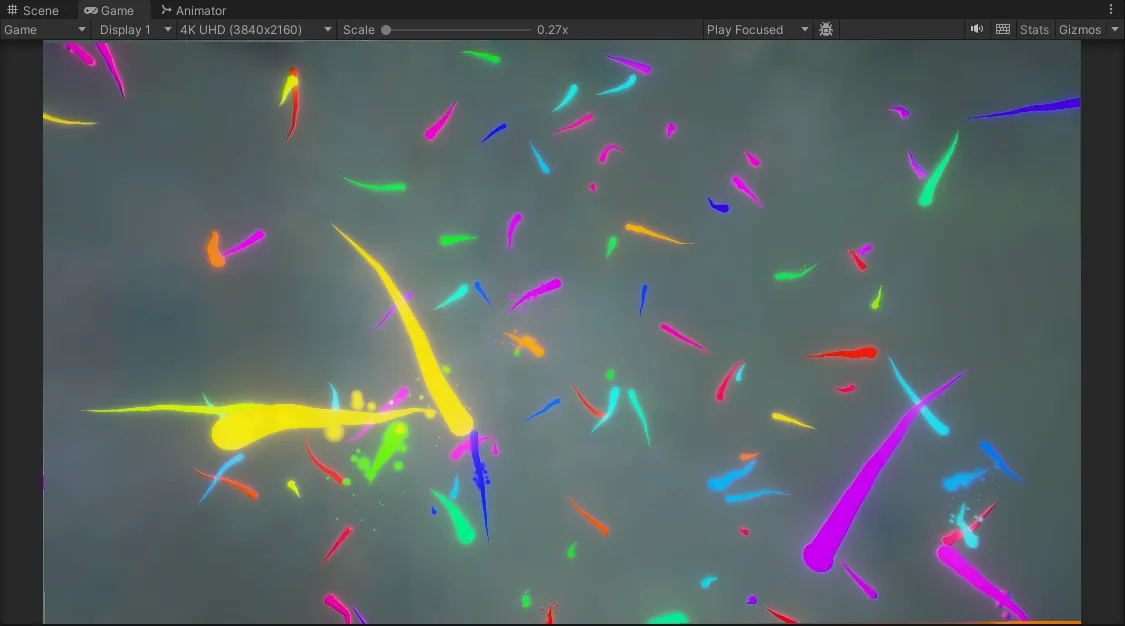

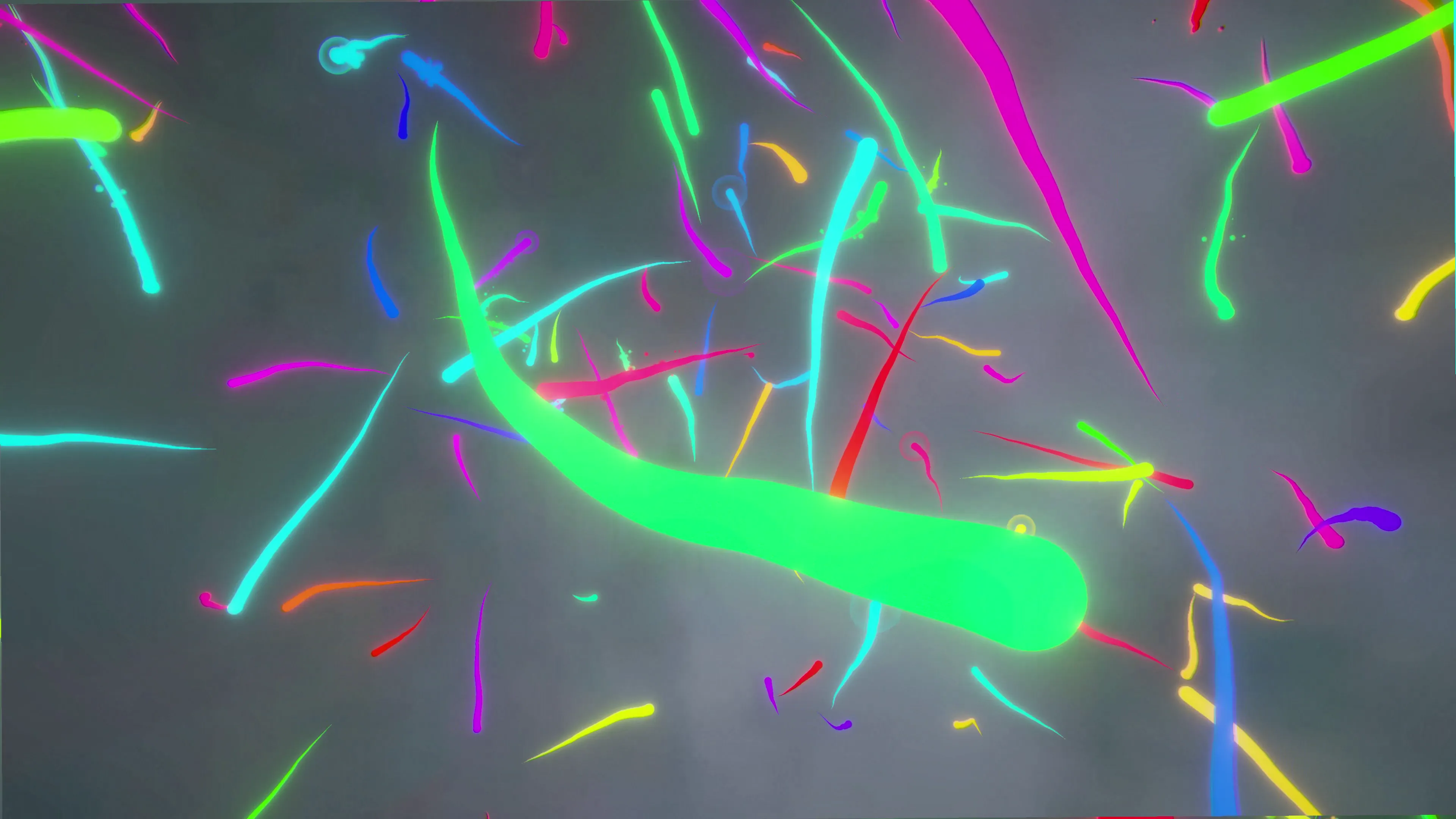

As the agent moves, a trail appears behind it. This helps visualize the path that the agent travels along.

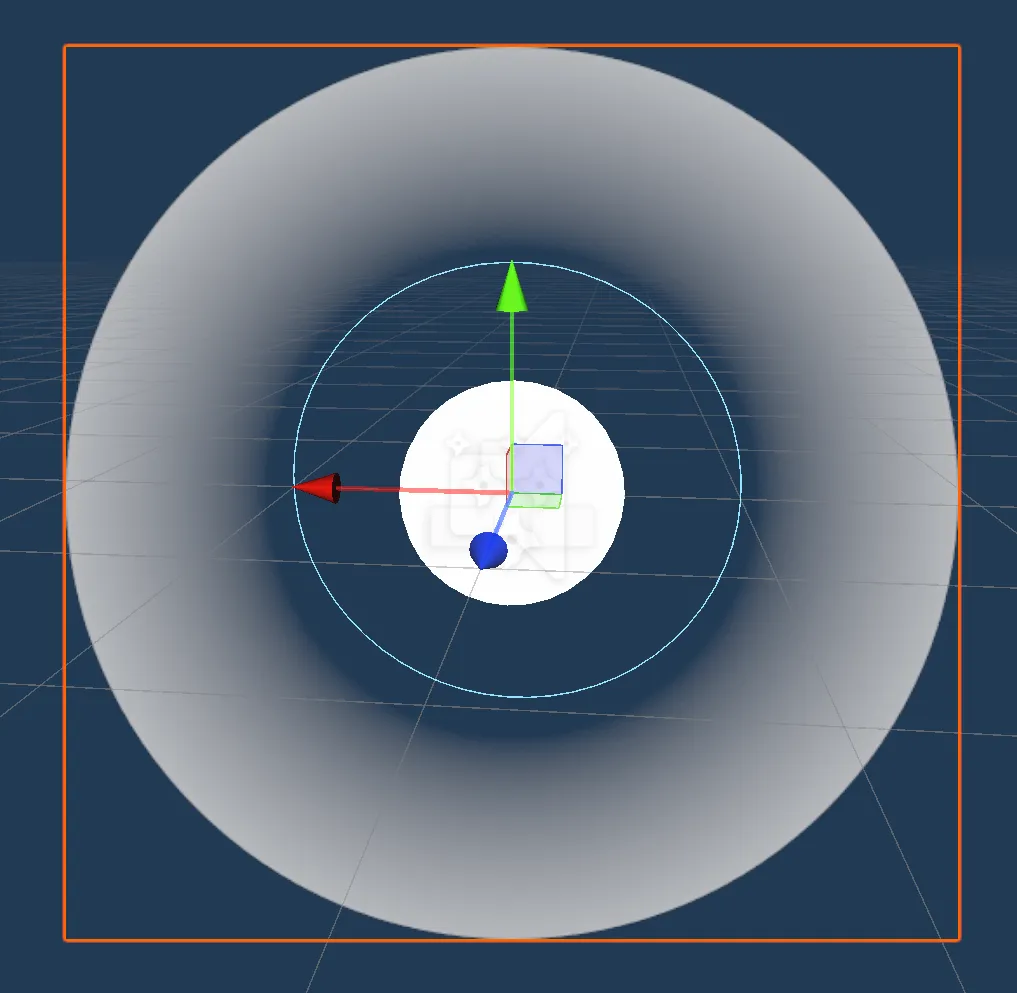

Each Agent has a chance of outputting a vocal-like sound every 5-20 seconds. This is accompanied by a circular disk effect. The visual effect is shown in Figure 6.
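One possible reading of this timing rule is a per-agent timer that waits a random 5 to 20 seconds and then calls out with some probability. The Python sketch below illustrates that reading only; the class name `CallTimer`, the `chance` value, and the exact way the delay and probability combine are assumptions rather than the project's actual logic.

```python
import random


class CallTimer:
    """Decides when an agent calls out, using a randomized delay between attempts."""

    def __init__(self, rng, min_delay=5.0, max_delay=20.0, chance=0.5):
        self.rng = rng
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.chance = chance  # hypothetical probability of calling out per attempt
        self.remaining = rng.uniform(min_delay, max_delay)

    def tick(self, dt):
        """Advance the timer by dt seconds; return True if the agent should call out."""
        self.remaining -= dt
        if self.remaining > 0:
            return False
        # The delay has elapsed: schedule the next attempt, then roll the chance.
        self.remaining = self.rng.uniform(self.min_delay, self.max_delay)
        return self.rng.random() < self.chance


if __name__ == "__main__":
    timer = CallTimer(random.Random(1))
    elapsed = 0.0
    while elapsed < 60.0:  # simulate one minute at 10 updates per second
        if timer.tick(0.1):
            print(f"call out at {elapsed:.1f}s")
        elapsed += 0.1
```

Giving every agent its own timer keeps the calls from lining up, which helps the soundscape feel irregular.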

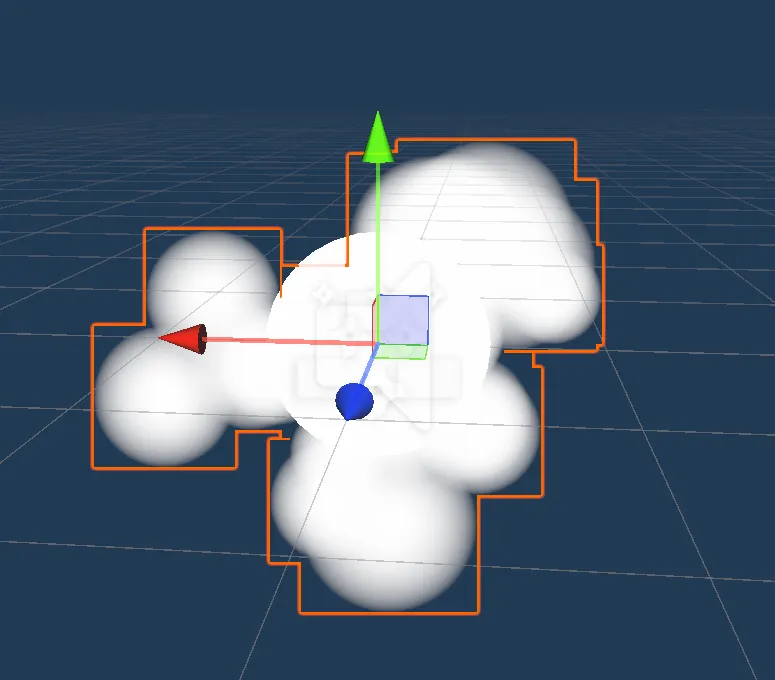

When two agents come close to each other, a guitar-like sound is played. This is accompanied by smaller particles. The visual effect is shown in Figure 7. The sound originates from a nylon guitar, edited for a long attack and decay time.
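The proximity check behind this effect can be illustrated with a brute-force pairwise distance test. This Python sketch is an assumption about one straightforward way to detect nearby agents, not a description of how the project detects them; the function name `close_pairs` and the threshold value are hypothetical.

```python
import math
from itertools import combinations


def close_pairs(positions, threshold):
    """Return index pairs (i, j), i < j, of agents within threshold of each other."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(positions), 2)
        if math.dist(a, b) <= threshold
    ]


if __name__ == "__main__":
    positions = [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (5.0, 5.0, 5.0)]
    # Each returned pair would trigger one guitar-like sound and particle burst.
    print(close_pairs(positions, threshold=1.0))
```

With 200 agents this compares 19,900 pairs per check, which is small enough that a brute-force pass is a reasonable starting point.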

Post-Processing effects were also applied. These include Tonemapping, which adjusts color levels; Bloom, which gives “glow” to colors; Vignette, which darkens the outer edges of the screen; and Chromatic Aberration, which offsets red, green, and blue colors for a slight “3D glasses” effect. A screenshot comparing the scene with and without post-processing effects is given in Figure 8.

Other Details

The background was randomly generated using wwwtyro’s (Space 3D, n.d.) Space 3D cubemap generator tool.

A series of cameras were also set up. One camera orbits the entire scene, one camera shows the world from a static location, and the rest of the cameras follow agents. The video transitions between each camera.

Results

A link to the video can be found at https://youtu.be/PKVFCgHuzNc. A 360/VR version was also recorded, although it lacks line trails and other quality effects. That version can be found at https://youtu.be/PKVFCgHuzNc.

As the scene progresses, agents “call out” (Figure 9) to other agents, with an accompanying sound and visual effect. When agents pass by each other, they “communicate” (Figure 10). When communicating, both agents generate sounds at the same time, forming musical chords. Ambient audio plays in the background.

This combination of agents and behaviors forms soundscapes and melodies that are unique with every playback.

Reflection

This project has allowed me to explore experimental animation in real-time contexts. While my previous project (experimental image) utilized interaction to manipulate a static and still image, this work does the opposite: it is a real-time game-engine render that requires no interaction from the viewer.

This also gave me an opportunity to explore content where visuals and audio are driven by each other. A good number of animations focus purely on the visual, with audio coming into the picture afterward. Or, the audio is created first, then the visuals reflect that audio. Thus, my work explores something slightly different from the two, as particles and sound rely on and accentuate each other.

In the future, I would like to translate some of the concepts that were used here into my own thesis work, which also integrates audio. In addition, this project leaves room for further exploration of other agent behaviors. Some agents may choose to flock together, and others may pursue or avoid others. Additional sound and visual effects could also be implemented, creating greater variation in both visuals and sound. Lastly, while the background is static, there is also room to explore more dynamic background effects.

References

ADSR explained: How to control synth envelopes in your music. (2023, June 28). Native Instruments Blog. https://blog.native-instruments.com/adsr-explained/

Blumenfeld, P. C., Kempler, T. M., & Krajcik, J. S. (2005). Motivation and Cognitive Engagement in Learning Environments. In R. K. Sawyer (Ed.), The Cambridge Handbook of the Learning Sciences (1st ed., pp. 475–488). Cambridge University Press. https://doi.org/10.1017/CBO9780511816833.029

Carey, N., & Clampitt, D. (1989). Aspects of Well-Formed Scales. Music Theory Spectrum, 11(2), 187–206. https://doi.org/10.2307/745935

Cazden, N. (1945). Musical Consonance and Dissonance: A Cultural Criterion. The Journal of Aesthetics and Art Criticism, 4(1), 3–11. https://doi.org/10.2307/426253

Champandard, A. J., & Dunstan, P. (n.d.). The Behavior Tree Starter Kit.

Colledanchise, M., Parasuraman, R., & Ögren, P. (2019). Learning of Behavior Trees for Autonomous Agents. IEEE Transactions on Games, 11(2), 183–189. https://doi.org/10.1109/TG.2018.2816806

Deutsch, R., & Deutsch, L. J. (1979). ADSR envelope generator. The Journal of the Acoustical Society of America, 66(3), 936. https://doi.org/10.1121/1.383279

Google Doodle Games—Oskar Fischinger’s. (n.d.). Retrieved October 21, 2024, from https://sites.google.com/site/populardoodlegames/oskar-fischinger-s

Kallmann, M. (1999). Steering Behaviors For Autonomous Characters.

Oskar Fischinger’s 117th Birthday Doodle—Google Doodles. (2017, June 22). https://doodles.google/doodle/oskar-fischingers-117th-birthday/

Somberg, G. (2023). Timed ADSRs for One-Shot Sounds. In Game Audio Programming 4. CRC Press.

Space 3D. (n.d.). Retrieved October 23, 2024, from https://tools.wwwtyro.net/space-3d/index.html#animationSpeed=1&fov=80&nebulae=true&pointStars=true&resolution=1024&seed=3o7rrn1rbykg&stars=true&sun=true

Thompson, K. (2005). Optical Poetry: The Life and Work of Oskar Fischinger. Film Quarterly, 59, 65–66. https://doi.org/10.1525/fq.2005.59.1.65

Tsuji, K., & Müller, S. C. (2021). Intervals and Scales. In K. Tsuji & S. C. Müller (Eds.), Physics and Music: Essential Connections and Illuminating Excursions (pp. 47–70). Springer International Publishing. https://doi.org/10.1007/978-3-030-68676-5_3

Zweifel, P. F. (1996). Generalized Diatonic and Pentatonic Scales: A Group-Theoretic Approach. Perspectives of New Music, 34(1), 140–161. https://doi.org/10.2307/833490

中村昭彦, 木下和彦, & 南條由起. (2022). The Pentatonic Scale Gives Everyone a Chance to Create Music. International Journal of Creativity in Music Education, 09, 42–55. https://doi.org/10.50825/icme.09.0_42